Sometimes even a few years ago can feel like long-past history. In CyberSecurity, it certainly can. This is especially true when new ideas emerge so quickly that current projects languish and eventually end up lost in the pile of unmaintained code.

So why bring this up?

I’ve been very excited to follow NIST’s progress as it develops the standards for OSCAL, the Open Security Controls Assessment Language. If you haven’t seen it yet, OSCAL is a language for facilitating the machine processing of compliance and security information. More specifically, it takes the English words we use to plan how we want to secure a computing system and makes them understandable by a computer.

The advantage of this is significant. English is artful, but it is poorly suited to documenting how security controls are implemented, and even less suited to documenting them in a way that machines can understand.

If you look at NIST SP800-53r5, with its 1,190 controls, and remember how many different ways each of them can be applied within a single computer, and how many computers are in a system, it can be daunting to think about creating a System Security Plan (SSP) that records all of this information and gives an auditor a path to validate those controls.

This is why we see SSPs that are hundreds of pages long, even for Moderate Baseline systems.

So the promise of OSCAL has been to make these documents machine native. Once they are, the computer can be asked to understand and validate the individual controls for each computer in a system.

For those with any military background, this will sound amazingly familiar: it is a lot like STIGing. STIGs are Security Technical Implementation Guides. They are typically used when a system administrator wants to ‘lock down’ a system to meet minimum standards; the STIG provides a list of security controls that should be followed. These are generally considered best practices, and not all of them can always be followed. What makes STIGs relevant is that they are (relatively) current and are available for many common operating systems and applications. So if you are building a Kubernetes system on Red Hat, there are appropriate STIGs out there for you.

And this is what takes us back to SCAP. Like OSCAL and STIGs, SCAP is a government standard for security. SCAP stands for Security Content Automation Protocol and was first published by NIST as SP800-126 in November of 2009. You may be more familiar with SCAP from some of its sub-protocols, like Common Vulnerabilities and Exposures (CVE), which is used to enumerate vulnerabilities as they are discovered, or the Common Vulnerability Scoring System (CVSS), which is used to determine the characteristics and severity of software vulnerabilities.

While these conventions are fairly common in the CyberSecurity community, it is less well known that there are actually nine sub-protocols in total within the SCAP specification. They deal with many aspects of how modern CyberSecurity tools function just below the surface, like Software Identification (SWID) Tags and Common Platform Enumeration (CPE), which are often used for asset inventory.

But our goal here is to understand the Common Configuration Enumeration (CCE). While it is somewhat obscure, CCE was an amazing protocol for its time. Its specific purpose is to give each security-relevant configuration setting a unique identifier and description, so that tools and documents can refer to the same setting and tell us whether it has been applied to a system or piece of software. This can apply to a human control or a machine control.

So, let’s use an example. If we start with the NIST SP800-53 control catalog, let’s say our goal is to implement protection for data at rest. Our primary control here would be SC-28, and specifically its enhancement SC-28(1), which says that we must “Implement cryptographic mechanisms to prevent unauthorized disclosure and modification of the following information at rest…”. We could also reference AC-3, AC-4, AC-6, AC-19, CA-7, CM-3, CM-5, CM-6, CP-9, MP-4, MP-5, PE-3, SC-8, SC-12, SC-13, SC-34, SI-3, SI-7, and SI-16, all of which bear on this requirement. For simplicity, however, let’s say we have a Windows laptop and we decide to use BitLocker to encrypt the data at rest on its internal storage. We could implement a few Common Configuration Enumerations (CCEs) to do this for us.

CCE-8278-4 Choose how BitLocker protected operating system drives can be recovered

CCE-8284-0 Configure BitLocker TPM platform validation profile

CCE-8299-0 Boot Manager Platform Configuration Register (aka PCR 10) by the TPM should be enabled

There are, of course, other controls we could implement; these are just a sample. But each of these controls comes with a registry entry that can be set or checked to ensure that the control is in place. So, as a developer of an application or an operating system, it would make sense to create a catalog of these controls that users could invoke to secure their systems. Moreover, these lists would be useful when subsequently assessing whether the system met specific standards, like those of a STIG.
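To make the idea of a control catalog concrete, here is a minimal sketch of mapping an SP800-53 control to the CCEs above and checking each one against a registry. It assumes a hypothetical catalog format, and the registry key and value names (`KEY`, `OsRecoveryConfigured`, and so on) are placeholders, not the real settings behind these CCE entries. The registry is simulated as a plain dictionary so the sketch runs on any platform.

```python
from dataclasses import dataclass
from typing import Dict, List, Mapping, Tuple

# HYPOTHETICAL registry location and value names: placeholders only,
# not the real settings behind these CCE entries.
KEY = r"HKLM\SOFTWARE\Example\BitLockerPolicy"

@dataclass(frozen=True)
class CceCheck:
    cce_id: str      # CCE identifier from the example above
    value_name: str  # hypothetical registry value name
    expected: int    # hypothetical compliant value

# Hypothetical mapping from an SP800-53 control to the CCEs that implement it.
CATALOG: Dict[str, List[CceCheck]] = {
    "sc-28.1": [
        CceCheck("CCE-8278-4", "OsRecoveryConfigured", 1),
        CceCheck("CCE-8284-0", "TpmProfileConfigured", 1),
        CceCheck("CCE-8299-0", "Pcr10Validated", 1),
    ],
}

# The registry is simulated as {(key, value_name): value} so this runs anywhere.
Registry = Mapping[Tuple[str, str], int]

def assess(control_id: str, registry: Registry) -> List[Tuple[str, bool]]:
    """Return (cce_id, passed) for every CCE mapped to control_id."""
    results = []
    for check in CATALOG.get(control_id, []):
        actual = registry.get((KEY, check.value_name))  # None when the value is unset
        results.append((check.cce_id, actual == check.expected))
    return results

snapshot = {
    (KEY, "OsRecoveryConfigured"): 1,
    (KEY, "TpmProfileConfigured"): 1,
    # "Pcr10Validated" is missing, so that check fails.
}
for cce_id, passed in assess("sc-28.1", snapshot):
    print(cce_id, "PASS" if passed else "FAIL")
```

The same catalog serves both purposes described above: a tool could use it to set the values when hardening a system, and an assessor could use it to check them later.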

Initially, we saw some great systems get developed from this, and the remains of some of them are still with us today. The Symantec Control Compliance Suite, for example, promised to assess controls and vulnerabilities through SCAP and return results in a standardized language that other systems could consume. The death and dismemberment of Symantec aside, these systems were promising for their time. They could have heralded a new path to continuous compliance by letting system operators understand the security posture of their systems against any known set of standards, simply by mapping these control statements to their catalog of requirements.

Instead, we got a fragmented industry where vulnerabilities and controls are treated as two separate ideas and assessment is difficult. Security controls have not been taken seriously as a compliance requirement, and we see the result today in the extraordinary friction involved in assessing systems against their security controls.

To put this in context, within the Department of Defense, it is common for a FISMA System to receive its Authority to Operate (ATO) after the System Owner has created a System Security Plan (SSP), and that plan is reviewed and assessed by the Security Control Assessor (SCA) and approved by the Authorizing Official (AO). If this seems like a lot of acronyms, it is. Check out this FISMA Assessment and Authorization (A&A) Wiki for a quick read on how some of this works.

For each system that goes through this process, it isn’t uncommon for it to take 6 to 9 months, and the process must usually be repeated every 3 years for the system to keep its authority to operate.

For the system owner, this is difficult, expensive, and time-consuming. More interesting, though, is that the process tells us nothing about the security of the system for most of the time it is in operation. For example, a security control only needs to be functioning while it is being assessed, which can comfortably be less than 5% of the time if the system administrator knows when the assessment will happen.
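To put rough numbers on that 5% figure, the sketch below assumes a hypothetical two-week window in which an assessor actually tests controls, once per 3-year cycle. The 6 to 9 months mentioned earlier covers the whole authorization effort; the observation window is assumed here to be much shorter.

```python
# HYPOTHETICAL figures: a two-week assessment window once per 3-year cycle.
ASSESSMENT_DAYS = 14
CYCLE_DAYS = 3 * 365

def observed_fraction(assessment_days: int, cycle_days: int) -> float:
    """Share of the authorization cycle during which controls are actually checked."""
    return assessment_days / cycle_days

print(f"{observed_fraction(ASSESSMENT_DAYS, CYCLE_DAYS):.1%}")  # prints 1.3%
```

Under these assumptions, controls are observed for roughly 14 of 1,095 days, leaving the remaining ~98.7% of the cycle unverified.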

Now, system administrators are not trying to make systems less secure, but manually assessing a system is tedious and takes time away from actually operating it. Resources being what they are, this is a difficult ask, and security can, and often does, slip between the day a system was assessed and the next time it is expected to be reassessed.

So this is where OSCAL comes to the table. The core value of OSCAL is that it should make it possible for assessments to be granular, automatic, and pervasive. By turning the assessment of security controls into something a computer can perform against itself, or, moreover, something an automated system knows how to look for when evaluating other computers, automating this task comes within reach.

Assessments become granular and pervasive because every component of the system would need to define its security controls at the local level and pass this information up through an OSCAL-based value chain to an assessment console.
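As a minimal sketch of that roll-up, assume a hypothetical data model in which each component reports a simple pass/fail result per control ID. The console below treats a control as satisfied only when no component reports it as failing, and records which components are out of compliance. The component names and data shape are illustrative, not part of OSCAL.

```python
from typing import Dict, List, Mapping

# HYPOTHETICAL data model: each component reports {control_id: passed}.
Reports = Mapping[str, Mapping[str, bool]]

def roll_up(reports: Reports) -> Dict[str, List[str]]:
    """Map each control to the components currently failing it (empty list = passing)."""
    failing: Dict[str, List[str]] = {}
    for component, results in reports.items():
        for control_id, passed in results.items():
            failing.setdefault(control_id, [])
            if not passed:
                failing[control_id].append(component)
    return failing

reports = {
    "laptop-01": {"sc-28.1": True, "ac-3": True},
    "laptop-02": {"sc-28.1": False, "ac-3": True},
    "file-server": {"ac-3": True},
}
print(roll_up(reports))  # {'sc-28.1': ['laptop-02'], 'ac-3': []}
```

Because each component reports for itself, the console can rerun this roll-up whenever new reports arrive rather than waiting for the next scheduled assessment.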

So all of this is to say that OSCAL has a promising set of features that the community sorely needs to improve its security capabilities. But then why discuss SCAP and STIGs at all?

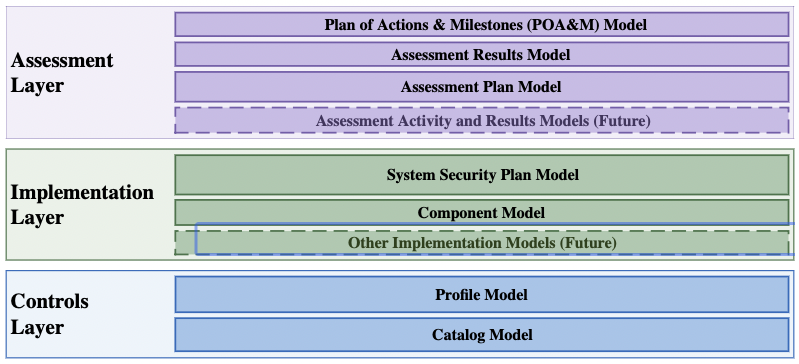

In the OSCAL protocol framework, there are three major layers.

The Control Layer is where we see the NIST SP800-53 Catalog. You can view the XML for this catalog, for FedRAMP, and for other artifacts as they are released on NIST’s GitHub page.
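Because the catalog is structured data rather than prose, a program can walk it directly. The sketch below uses the JSON rendering (NIST also publishes the catalog as XML and YAML) and a hand-written, heavily abbreviated fragment; a real catalog carries much more (uuid, metadata, params, parts). It relies only on the general shape of an OSCAL catalog: groups that contain controls, and controls that contain their enhancements as nested controls.

```python
import json
from typing import Iterator, List, Tuple

# Hand-written, heavily abbreviated fragment shaped like an OSCAL catalog in JSON.
# Real catalogs also carry uuid, metadata, params, parts and more.
CATALOG_JSON = """
{"catalog": {"groups": [
  {"id": "sc", "title": "System and Communications Protection",
   "controls": [
     {"id": "sc-28", "title": "Protection of Information at Rest",
      "controls": [{"id": "sc-28.1", "title": "Cryptographic Protection"}]}
   ]}
]}}
"""

def walk_controls(controls: list) -> Iterator[Tuple[str, str]]:
    """Yield (id, title) for each control, descending into its enhancements."""
    for control in controls:
        yield control["id"], control["title"]
        yield from walk_controls(control.get("controls", []))

def walk_groups(groups: list) -> Iterator[Tuple[str, str]]:
    """Groups may hold controls and nested groups."""
    for group in groups:
        yield from walk_controls(group.get("controls", []))
        yield from walk_groups(group.get("groups", []))

def list_controls(catalog: dict) -> List[Tuple[str, str]]:
    # Controls may sit inside groups or directly under the catalog.
    return list(walk_groups(catalog.get("groups", []))) + list(
        walk_controls(catalog.get("controls", []))
    )

catalog = json.loads(CATALOG_JSON)["catalog"]
for control_id, title in list_controls(catalog):
    print(control_id, "-", title)
```

Note that the enhancement SC-28(1) appears as the ID `sc-28.1`; that is the identifier a component definition would point back to.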

The Assessment Layer will most likely be delegated to a GRC (governance, risk, and compliance) tool, such as a dashboard or reporting platform.

But the Implementation Layer is where the real action is.

From this layer, we need to see the specific Component Model and how it aggregates into a System Security Plan Model. To do this, we need a Component Catalog.

Think of the Component Catalog as a set of pointers that tell us how any given security control would be implemented across any possible system. Ideally, the developer of each system component would create these, but it is likely that many of them will have to be created independently.
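As a rough illustration of what one entry in such a catalog might look like, the sketch below builds a skeleton in the shape of an OSCAL component definition for the BitLocker example, linking control `sc-28.1` to the CCE identifiers. It is not schema-validated. The titles, the `source` URL, the assumed OSCAL version, and the custom `cce-id` property with its namespace are all hypothetical placeholders.

```python
import json
import uuid
from datetime import datetime, timezone

def new_uuid() -> str:
    return str(uuid.uuid4())

def bitlocker_component_definition(cce_ids):
    """Build a minimal, NOT schema-validated component-definition skeleton."""
    return {
        "component-definition": {
            "uuid": new_uuid(),
            "metadata": {
                "title": "Example BitLocker component definition",  # hypothetical
                "last-modified": datetime.now(timezone.utc).isoformat(),
                "version": "0.1",
                "oscal-version": "1.1.2",  # assumed target OSCAL version
            },
            "components": [{
                "uuid": new_uuid(),
                "type": "software",
                "title": "BitLocker on a Windows laptop",
                "description": "Full-volume encryption of the internal drive.",
                "control-implementations": [{
                    "uuid": new_uuid(),
                    # Placeholder: should point at the catalog or profile being implemented.
                    "source": "https://example.com/hypothetical-profile.json",
                    "description": "Data-at-rest protection via BitLocker.",
                    "implemented-requirements": [{
                        "uuid": new_uuid(),
                        "control-id": "sc-28.1",
                        "description": "Internal storage is encrypted with BitLocker.",
                        # Hypothetical custom property carrying the CCE identifiers.
                        "props": [
                            {"name": "cce-id", "ns": "https://example.com/ns/hypothetical", "value": cce}
                            for cce in cce_ids
                        ],
                    }],
                }],
            }],
        }
    }

doc = bitlocker_component_definition(["CCE-8278-4", "CCE-8284-0", "CCE-8299-0"])
print(json.dumps(doc, indent=2))
```

OSCAL supplies the envelope here; which CCEs belong under `sc-28.1`, and how each one is checked, is exactly the content the catalog builders would have to agree on.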

To create this catalog, we need a standard framework for documenting these controls. While OSCAL gives us some standards here, it says little about how these controls are implemented or assessed; it only provides the language in which that will be communicated.

This is where the SCAP CCE and STIG communities can come together and make common cause in creating libraries of controls that can be consumed within the OSCAL framework.

What our industry needs is an assessment methodology and an implementation language that allow for cross-platform commitment to developing, expressing, and auditing against this control set.

Remember, it isn’t enough to take our existing System Security Plans, which are written in English, and put them into XML. In that form, they are no more machine friendly than a Microsoft Word file.

The revolution of OSCAL is in the ability to drive the real-time automated assessment of security controls through the OSCAL standard so that we can truly move into automated continuous assessment.